The AI-Enhanced Product Manager: 9 Google Workflows to Supercharge Your Strategy

- Tharun Poduru

- 7 days ago

- 11 min read

Updated: 4 days ago

Introduction

Welcome! If you are joining after my presentation at Santa Clara University last night, visiting from LinkedIn, or just exploring the latest in AI and stumbled upon this post, I am glad you are here.

Last night, I had the opportunity to share a few workflows I use to manage the complexity of modern product development. I was immediately asked by my peers for the specific tools, links, and prompts I used. But a simple email wouldn't do justice to these dynamic tools. So, I decided to document the full workflows here.

Fair warning: this is a comprehensive post. I wanted to create a single, detailed resource that you can bookmark and refer back to as you experiment with these tools yourself.

In Product Management, the job is often defined by the "Gap of Ambiguity": the frustrating space between writing a requirement in a PRD and waiting weeks to see whether the engineering output matches the vision. The central theme of my presentation was simple: that gap has collapsed.

With the latest suite of tools from the Google ecosystem, specifically Gemini Canvas, Opal, and NotebookLM, we can now research, prototype, test, and strategize at the speed of thought. Below is a breakdown of the 9 specific workflows I demonstrated.

Rapid Prototyping & Integration (Gemini Canvas)

One of the biggest bottlenecks in product management is the time it takes to go from a written requirement to a visual proof-of-concept. Usually, this involves sketching on a whiteboard, waiting for a designer, or struggling with wireframing tools.

I wanted to show how Gemini Canvas changes this dynamic by acting as both a coding partner and a design engine.

Workflow #1: The 30-Second Prototype

The Use Case: Visualizing a Complex Agent Assist Dashboard.

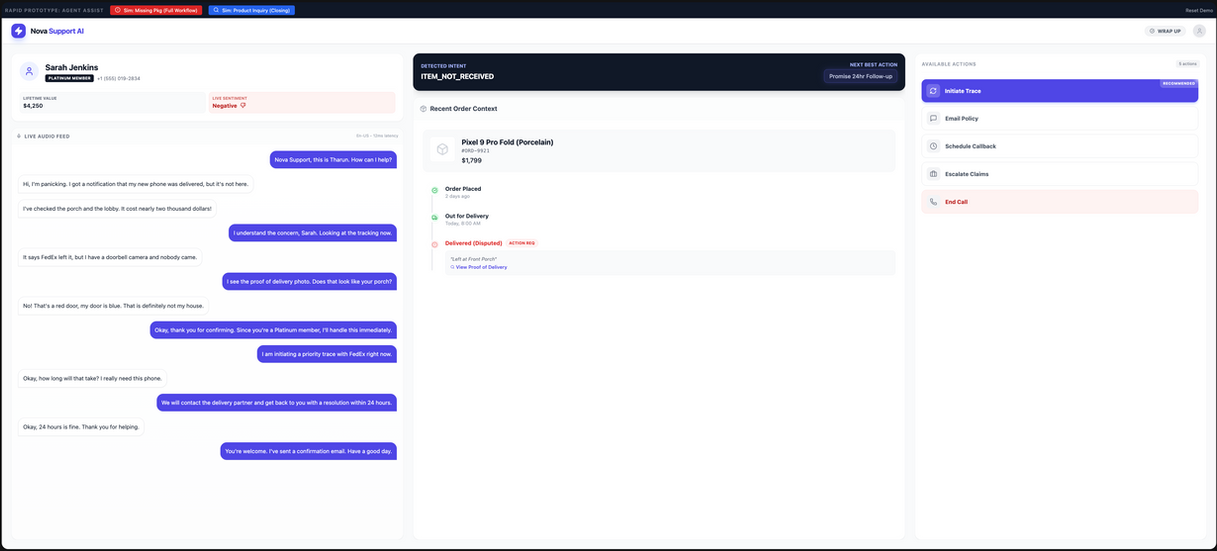

To demonstrate this, I used a concept called "Nova Support AI." The goal was to build a real-time agent assist platform that analyzes voice intent and sentiment to surface the "Next Best Action" for support staff.

The Workflow: Instead of writing a ticket for a developer, I uploaded a product description and prompted Gemini:

"Create a fully functional, interactive React mock-up for Nova Support AI. It should handle live transcription, detect customer sentiment, and surface context-aware actions like 'Initiate Trace' or 'Apply Discount'."

Pro Tip: The quality of the output depends on your input. If you don't have a detailed PRD, you can actually ask Gemini to write one first! A prompt like "Expand this 3-sentence idea into a detailed Product Definition" works wonders as a starting point.

The Result: Gemini rendered a professional, split-screen interface. It wasn't just a static image; it was a live simulation. I could see the "Live Audio Feed" transcribing in real-time, the sentiment score shifting, and the "Available Actions" panel updating dynamically based on the conversation. Mock App Link: Nova Support
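To make the behavior of the mock-up concrete, here is a minimal Python sketch of the kind of logic such a dashboard simulates. It is not the code Gemini generated. The keyword lists, the `Turn` class, and the function names are all hypothetical, and a real product would use a sentiment model rather than word matching.

```python
from dataclasses import dataclass

# Hypothetical keyword lists, for illustration only.
NEGATIVE_WORDS = {"late", "lost", "angry", "broken", "frustrated"}
POSITIVE_WORDS = {"thanks", "great", "perfect", "happy"}
TRACE_WORDS = {"late", "lost"}


@dataclass
class Turn:
    speaker: str  # "customer" or "agent"
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase the text and strip basic punctuation from each word."""
    return {w.strip(".,!?").lower() for w in text.split()}


def sentiment_score(words: set[str]) -> int:
    """Crude score: +1 per positive word present, -1 per negative word."""
    return len(words & POSITIVE_WORDS) - len(words & NEGATIVE_WORDS)


def next_best_actions(turns: list[Turn]) -> list[str]:
    """Suggest actions from everything the customer has said so far."""
    words: set[str] = set()
    for turn in turns:
        if turn.speaker == "customer":
            words |= tokenize(turn.text)
    actions = []
    if words & TRACE_WORDS:
        actions.append("Initiate Trace")
    if sentiment_score(words) < 0:
        actions.append("Apply Discount")
    return actions


call = [Turn("customer", "My package is late and I am frustrated!")]
print(next_best_actions(call))
```

The point of the sketch is the shape of the loop: each new transcript line updates the sentiment and the action list, which is what the "Available Actions" panel shows in the prototype.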

Workflow #2: Iterative Refinement (The "Just Ask" Method)

The Use Case: Rapid UI experimentation.

The next challenge is often aesthetic or accessibility preferences. A stakeholder might ask, "This looks too bright for our night-shift agents. Can we see a high-contrast version?"

Traditionally, refactoring a UI for dark mode involves changing CSS variables across multiple files. With Canvas, I simply asked:

"Make the entire application dark themed."

The Result: Gemini instantly inverted the color palette, adjusted the contrast ratios for readability, and updated the entire UI to a sleek dark mode without breaking any of the underlying logic. Mock App Link: Nova Support (Dark Theme)
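If you want to sanity-check a generated dark theme yourself, the contrast ratio between text and background can be computed with the WCAG 2.x formula. The sketch below is a small Python version of that formula. The two hex colors in the usage line are hypothetical examples, not values taken from the Nova Support mock-up.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio, from 1.0 (identical) to 21.0 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str) -> bool:
    """WCAG AA requires at least 4.5:1 for normal-size body text."""
    return contrast_ratio(foreground, background) >= 4.5


# Hypothetical dark-theme pair: light grey text on a near-black panel.
print(passes_aa("#e0e0e0", "#121212"))
```

A check like this is useful before sending a dark variant to night-shift agents, since a theme can look sleek and still fall below the readable threshold.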

Workflow #3: Zero-Latency A/B Testing

The Use Case: Validating user preference without deployment.

Now I had two fully functional versions of the application:

- Version A: The original Light Mode dashboard.
- Version B: The new Dark Mode variant.

Since Gemini generates a unique public link for every version, I essentially had an A/B testing environment ready in seconds. I could send both links to a user research group and ask, "Which interface allows you to scan the 'Next Best Action' faster?"

This allows us to validate design decisions based on interaction, not just imagination, all before a single line of production code is written.
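Once the research group has tried both links, the comparison itself can be very simple. Here is a minimal Python sketch that averages how long each participant took to find the "Next Best Action" in each version. The timing numbers are invented for illustration, and with a handful of participants the result is a directional signal, not a statistically significant one.

```python
from statistics import mean


def summarize_ab(results: dict[str, list[float]]) -> dict[str, float]:
    """Average scan time in seconds per variant, rounded to two decimals."""
    return {variant: round(mean(times), 2) for variant, times in results.items()}


def faster_variant(results: dict[str, list[float]]) -> str:
    """Name of the variant with the lowest average scan time."""
    summary = summarize_ab(results)
    return min(summary, key=summary.get)


# Hypothetical scan times (seconds) collected from three participants.
scan_times = {
    "A (light)": [4.2, 3.9, 5.1],
    "B (dark)": [3.1, 3.6, 3.4],
}
print(summarize_ab(scan_times))
print(faster_variant(scan_times))
```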

By using these workflows, we move from "I think this might work" to "I know this works" in minutes, not weeks. It shifts the conversation from subjective opinions on abstract requirements to objective decisions based on tangible interactions. The risk of building the wrong thing plummets because you have validated the experience before engineering has written a single line of code.

Visual Synthesis & Communication (Gemini 3 / Nano Banana Pro)

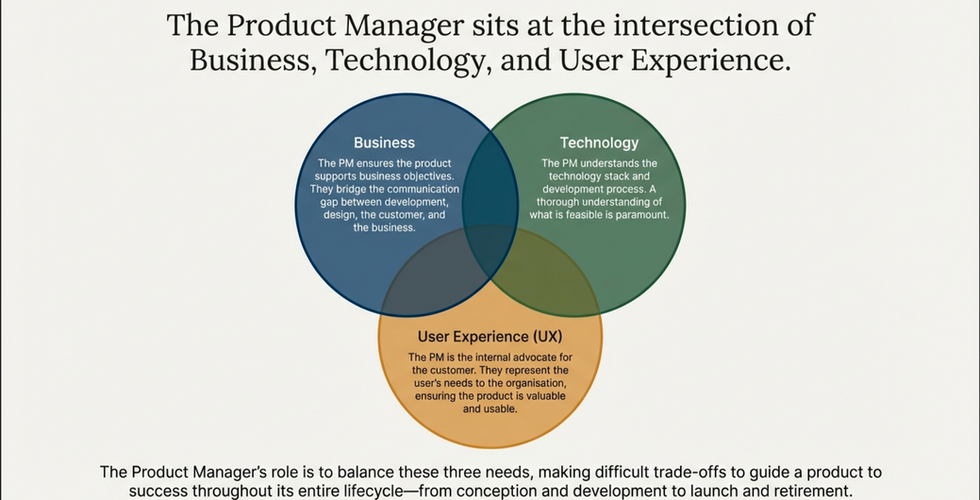

A massive part of the Product Manager role is communication. Teams often struggle to align engineering, design, and marketing around a shared mental model. A dense PRD or a long email frequently creates more confusion than clarity.

We all know a picture is worth a thousand words. But until recently, AI struggled to generate images with legible text or complex diagrams. With the release of Nano Banana Pro (Google's name for its Gemini 3 Pro Image model), that limitation has largely vanished.

Here is how I used it to turn abstract concepts into concrete visuals.

Workflow #4: The "Visual Synthesizer"

The Use Case: Converting dense technical documentation into a digestible format.

We have all been there. You have 5 tabs open with technical documentation, release notes, and API specs. You could read them all line-by-line, or you could ask AI to summarize them. But text summaries can be dry and hard to recall.

I decided to try something fun. I fed Gemini two dense announcement and technical links about the new Gemini 3 model and gave it a simple visual challenge:

"Convert all the Knowledge you have about Gemini 3 into an image of a classroom whiteboard."

The Result: Instead of a bulleted list, it generated a photorealistic image of a whiteboard. But look closer. The text is actually readable. It organized the information into "Core Features," "Developer Focus," and "Future Ecosystem," complete with little diagrams and boxes. It turned a reading assignment into a visual study guide.

Workflow #5: The Instant Infographic

The Use Case: Creating educational assets for stakeholder alignment.

One of the most common questions in our field is how the various "PM" roles differ. Product, Project, and Program Management sound alike, so what actually separates them?

I used AI to not only define them but to visualize them side-by-side for clarity.

The Workflow:

Research: I asked it to "Create a detailed guide of all the different types of PMs."

Visualize: Once it had the definitions, I simply prompted: "Generate a guide image."

The Result: It created a clean, professional infographic that perfectly broke down the three distinct pillars. It visualized Product Management as the "What & Why," Project Management as the "How & When," and Program Management as the "Coordination." This is the kind of asset you can drop into a slide deck to instantly align a room.

Workflow #6: The Physical Product Prototype

The Use Case: Rapid iteration for physical goods.

Product Management isn't just about software. Whether you are designing shoes, furniture, or hardware, the need to visualize a "V2" based on user feedback is universal.

I wanted to show how AI compresses the design cycle for physical products using the Nike Zoom Vomero Roam.

The Workflow: This was a two-part process that combined deep research with creative generation.

The Research & Design: I asked Gemini to read reviews of the current shoe, identify user complaints (traction, weatherproofing), and generate an image of a "V2" that addressed these issues, presented like a product magazine.

The Iteration: Just like in software, physical products go through color and material iterations. I followed up with: "Can you change the color to Black and Red like the Atoms 251?"

The Result: Gemini generated a magazine-quality concept image with callouts pointing to the new "Weather-Sealed Knit Gaiter" and "Vibram Outsole." When I asked for the color change, it instantly pivoted the design while keeping the lighting and textures consistent.

These workflows demonstrate a shift in how we can communicate. We no longer have to rely solely on text or wait for a graphic designer to visualize a concept. Whether it is explaining a technical architecture, building an infographic, or mocking up a physical product, we can now generate high-fidelity visual aids in seconds. This allows us to align stakeholders faster and with greater clarity.

Building Strategy Tools (Google Opal)

We often talk about "AI apps" as these complex systems that engineers build. But Google's new tool, Opal, flips that script. It allows you to build "mini-apps" just by describing what you want them to do.

This is a massive unlock for Product Managers. Instead of waiting for an internal tool to be built to analyze competitor pricing or summarize feedback, you can just build it yourself before your morning coffee is finished.

Here is how I used Opal to automate strategic analysis.

Workflow #7: The "App-in-a-Minute"

The Use Case: Building bespoke strategy tools without writing code.

To demonstrate the speed of this tool, I decided to build a Business Model Canvas Generator live.

The Workflow: I didn't write a single line of Python or JavaScript. Instead, I opened Opal and dragged four simple blocks onto the canvas:

- User Input: A simple box to enter a "Product Name."
- Deep Research: I connected the input to a "Gather Product Insights" block powered by Gemini 2.5 Flash, instructing it to research everything about the product's business model.
- Analysis: I fed that research into a "Generate Business Model" block, which parsed the data into the 9 classic building blocks (Value Prop, Key Partners, Cost Structure, etc.).
- Rendering: Finally, I asked it to "Render a Business Model Canvas Webpage" using HTML/CSS so it looked professional.

The Result: I typed in "iPhone Air" as a hypothetical product test. In under 30 seconds, the app researched the current smartphone market, inferred Apple's likely strategy for a "Slim" phone, and generated a fully formatted, interactive Business Model Canvas webpage.

Pro Tip: While I built this flow manually by dragging nodes, you don't have to. Opal has Gemini integrated directly into the canvas. You can simply type "Create a workflow that takes a product name and generates a Business Model Canvas," and it will construct the entire node structure for you automatically.
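For readers who think in code, the four Opal blocks map onto a plain function pipeline. The Python sketch below is only an analogy for how the data flows. It is not how Opal works internally, the function names are hypothetical, and the research and analysis steps are stubs where a real flow would call a model. The nine block names are the standard Business Model Canvas sections.

```python
from html import escape

# The nine standard Business Model Canvas building blocks.
BMC_BLOCKS = [
    "Customer Segments", "Value Propositions", "Channels",
    "Customer Relationships", "Revenue Streams", "Key Resources",
    "Key Activities", "Key Partnerships", "Cost Structure",
]


def gather_product_insights(product_name: str) -> str:
    """Stub for the research block; a real flow would query a model here."""
    return f"(research notes about {product_name} would go here)"


def generate_business_model(product_name: str, research: str) -> dict[str, str]:
    """Stub for the analysis block: one placeholder entry per building block."""
    return {block: f"TODO: {block} for {product_name}" for block in BMC_BLOCKS}


def render_canvas_html(product_name: str, model: dict[str, str]) -> str:
    """Rendering block: turn the structured model into a simple HTML page."""
    cells = "\n".join(
        f"  <section><h2>{escape(block)}</h2><p>{escape(text)}</p></section>"
        for block, text in model.items()
    )
    title = escape(product_name)
    return f"<article>\n<h1>{title}: Business Model Canvas</h1>\n{cells}\n</article>"


def run_pipeline(product_name: str) -> str:
    """User input -> research -> analysis -> rendering, in that order."""
    research = gather_product_insights(product_name)
    model = generate_business_model(product_name, research)
    return render_canvas_html(product_name, model)


print(run_pipeline("iPhone Air"))
```

Each function consumes only the output of the previous one, which is the same constraint the connected blocks impose on the Opal canvas.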

Workflow #8: The Strategic Analyst (Agentic Logic)

The Use Case: Automating deep-dive product strategy and recommendations.

After building the simple Business Model Canvas tool, I wanted to push Opal further. Could we build a tool that doesn't just summarize data but actually thinks like a strategist?

I built a "Product Management Recommendation Engine" that adapts its analysis based on your specific goal.

The Workflow: This workflow involved a "Brain" step that makes autonomous decisions.

The Inputs: I created fields for the "Product Name" (e.g., Google Pixel Watch 3) and the "Goal" (e.g., "Planning for the next version").

The Agentic Step ("Select Framework"): This is the magic. I provided the AI with a structured library of over 50 distinct frameworks, organized into 7 logical groups ranging from Strategy & Market Analysis to Launch & Lifecycle. I instructed the Agent to evaluate my specific goal and select only the single most relevant group. Based on my prompt, it zeroed in on Group 4: Evaluation, Forecasting & Prioritization, automatically applying tools like the RICE Scoring Model and MoSCoW Prioritization while filtering out the noise.

The Deep Dive: It then conducted live web research, applied those specific frameworks, and synthesized a "Recommendation Report."
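The "Select Framework" step is easier to reason about as a routing function: given a goal, return exactly one group. The Python sketch below fakes that decision with keyword overlap, which is a deliberate simplification, since in Opal the choice is made by the model. Only the three group names and the two frameworks mentioned in this post are included. The keyword sets are hypothetical, and the frameworks in the other groups are left empty because they are not listed here.

```python
import re

# Hypothetical routing table; keywords are illustrative assumptions.
FRAMEWORK_GROUPS = {
    "Strategy & Market Analysis": {
        "keywords": {"market", "competitor", "positioning", "strategy"},
        "frameworks": [],  # not listed in this post
    },
    "Evaluation, Forecasting & Prioritization": {
        "keywords": {"planning", "prioritize", "forecast", "next", "version"},
        "frameworks": ["RICE Scoring Model", "MoSCoW Prioritization"],
    },
    "Launch & Lifecycle": {
        "keywords": {"launch", "release", "rollout", "sunset"},
        "frameworks": [],  # not listed in this post
    },
}


def select_group(goal: str) -> str:
    """Return the single group whose keywords best overlap the goal text."""
    words = set(re.findall(r"[a-z]+", goal.lower()))
    scores = {
        name: len(words & group["keywords"])
        for name, group in FRAMEWORK_GROUPS.items()
    }
    best = max(scores, key=scores.get)
    if scores[best] == 0:
        raise ValueError(f"No framework group matches goal: {goal!r}")
    return best


group = select_group("Planning for the next version")
print(group, FRAMEWORK_GROUPS[group]["frameworks"])
```

Forcing the function to return one group, or fail loudly, mirrors the instruction to select only the single most relevant group instead of applying every framework at once.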

The Result: The tool generated a comprehensive HTML report on the next generation of the watch, the Google Pixel Watch 4. Instead of generic advice, it provided a rigorous prioritization analysis using the MoSCoW method and a Risk/Payoff Matrix, helping me decide which features (like multi-day battery) should actually make the cut.
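Of the frameworks the agent selected, RICE is the one that reduces to arithmetic: score = Reach × Impact × Confidence ÷ Effort. Here is a small Python sketch of that calculation. Only the "multi-day battery" feature name comes from the report; the other features and every number are hypothetical placeholders, not output from the tool.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    reach: float       # users affected per period
    impact: float      # commonly 0.25 (minimal) up to 3 (massive)
    confidence: float  # 0.0 to 1.0
    effort: float      # person-months

    @property
    def rice(self) -> float:
        """RICE score: (reach * impact * confidence) / effort."""
        return self.reach * self.impact * self.confidence / self.effort


def rank_by_rice(features: list[Feature]) -> list[Feature]:
    """Highest RICE score first."""
    return sorted(features, key=lambda f: f.rice, reverse=True)


# Hypothetical inputs for illustration only.
backlog = [
    Feature("New watch face pack", reach=2000, impact=0.5, confidence=0.9, effort=1),
    Feature("Multi-day battery", reach=8000, impact=3, confidence=0.8, effort=6),
    Feature("Offline maps", reach=3000, impact=2, confidence=0.5, effort=4),
]
for feature in rank_by_rice(backlog):
    print(f"{feature.name}: {feature.rice:.0f}")
```

The ranking is only as good as the estimates behind it, so it is worth reviewing the inputs the report used before trusting the order.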

These workflows transform the PM from a "data gatherer" into a "decision architect." You are building a system that knows how to think about a problem. Instead of spending 3 days reading reviews and picking frameworks, you spend 3 minutes building a tool that does it for you forever.

The Knowledge Engine (NotebookLM)

The final tool is one I didn't get to demonstrate live in class, but it might be the most powerful one for personal productivity. For anyone stepping into product management, the sheer volume of reading material can be overwhelming. We are constantly bombarded with market reports, competitor analyses, technical docs, and even class notes.

I wanted to show how we can turn this "information overload" into an advantage by using any source material you have.

Workflow #9: The "Universal Translator"

The Use Case: Instantly converting raw research into every possible format (Podcast, Video, Slides).

I created a "Product Management Master Notebook" and added 16 different source links and PDFs regarding product management best practices.

The Workflow: I didn't just chat with the documents. I used NotebookLM's multimodal generation features to transform this dry text into engaging content types:

The Podcast (Audio Overview): I clicked one button to generate a "Deep Dive" conversation. It created realistic banter between two AI hosts discussing the nuances of Product vs. Project management. You can add any source material and listen to a podcast about it while driving to work.

The Video Overview: I took it a step further and generated a Video Overview. This creates a dynamic video summary where the AI hosts guide you through the core concepts visually. It is perfect for when you are a visual learner but don't have time to read the full text.

The Slide Deck: I asked it to "Create a Slide Deck," and it structured a presentation with bullet points and speaker notes based on the source material.

The Visuals: I even asked it to generate an infographic summarizing the key "Pillars of Competency" found in the text.

The Result: It turns information consumption from a chore into a choice. Instead of forcing yourself to read a 50-page PDF, you can listen to it as an audio overview or watch a quick video summary. Instead of spending hours outlining a deck, you can have the AI draft the narrative structure for you. Notebook Link: The Essence of Product Management

These capabilities represent a fundamental shift in how we process information. They allow us to adapt the content to our context, rather than forcing ourselves to adapt to the content. Whether you are commuting to class or preparing for an interview, you can turn your research into a format that works for you right now.

The Horizon: What is Coming Next?

While the 9 workflows above are things you can use today, the landscape is shifting under our feet. Just this week, we have seen announcements that hint at where we are going next.

If you want to see where the industry is heading, keep an eye on these three emerging tools:

- Google Pomelli: Think of this as "Marketing on Autopilot." It is an experimental tool that scans your website to create a "Business DNA" profile, then generates on-brand marketing assets (posts, ads, copy) at scale. For anyone working on Growth or GTM strategies, this could automate the entire asset creation pipeline.
- Google Flow (with Veo 3): We used to create static mockups. Now, with Veo 3, we are generating high-fidelity video with sound. Google Flow takes this a step further, acting as a dedicated workspace to edit and stitch these AI-generated clips together. Imagine prototyping a 30-second commercial for your product without hiring an agency.
- Google Antigravity: This is the big one for the technical side of product development. It is an "AI-first" IDE where you don't just write code; you manage agents. You give it a mission ("Build me a landing page"), and the agents plan, code, and test it autonomously. It shifts the role from "Coder" to "Mission Control."

Conclusion

Looking back at these 9 workflows, one thing is clear: the role of the Product Manager hasn't changed, but the speed at which we can execute has exploded.

We still need to define the "Why" and the "What." We still need to have empathy for users and align stakeholders. But the friction between having an idea and showing that idea is disappearing.

Need a prototype? Just ask Canvas.

Need a strategy tool? Build it in Opal.

Need a visual concept? Generate it with Gemini.

I focused heavily on the Google ecosystem in this post because that is what I use daily and what we have easy access to. But the world of AI for Product Management is vast. There are incredible tools from OpenAI, Anthropic, Microsoft, and hundreds of startups that are solving specific niche problems.

Don't just take my word for it. Google these products. Watch the demos. Try them out. The modern product manager isn't defined by the specific tools they use, but by their curiosity to explore them.

Want to dive deeper into any of these workflows? I’d love to hear how you are using AI in your own journey. Connect with me on LinkedIn and let’s continue the conversation. If you found these workflows helpful, I invite you to read my other Product Management Blogs where I explore the intersection of strategy, technology, and user needs. You can also check out my Projects section to see how I have applied these principles to build full-stack applications.
